The What, When, Why, and How of Formative Evaluation of Instruction

By M. Andrew Young

Hello! My name is M. Andrew Young. I am a second-year Ph.D. student in the Evaluation, Statistics, and Methodology Ph.D. program here at UT-Knoxville. I currently work in higher education assessment as a Director of Assessment at East Tennessee State University’s College of Pharmacy. As part of my duties, I am frequently called upon to conduct classroom assessments.

Higher education assessment often relies on summative evaluation of instruction, also commonly known as course evaluations, summative assessment of instruction (SAI), or summative evaluation of instruction (SEI), among other titles, administered at the end of a course. At my institution, the purpose of summative evaluation of instruction is primarily to evaluate faculty for tenure, promotion, and retention. What if there were a more student-centered approach to gathering classroom evaluation feedback, one that benefits not only students in future classes (as summative assessment does) but also students currently enrolled in the class? Enter formative evaluation of instruction (FEI).

What is FEI?

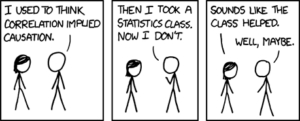

FEI, sometimes referred to as midterm evaluations, entails seeking feedback from students prior to the semester midpoint in order to make mid-stream changes that address each cohort’s individual learning needs. Collecting meaningful and actionable FEI can prove challenging. Some faculty prefer not to participate in formative evaluation because they do not find the feedback from students actionable, or because they do not value the student input. Furthermore, there is little direction on how to collect this feedback and how to use it for continual quality improvement in the classroom. While there is a lot of literature on summative evaluation of teaching, there seems to be a dearth of research on best practices for formative evaluation of teaching. The few articles that I have been able to find offer suggestions for FEI, which are covered later in this post.

When Should We Use FEI?

In my opinion, every classroom can benefit from formative evaluation. When to administer it is as much an art as it is a science. Timing is everything and the results can differ greatly depending on the timing of the administration of the evaluation. In my time working as a Director of Assessment, I have found that the most meaningful feedback can be gathered in the first half of the semester, directly after a major assessment. Students have a better understanding of their comprehension of the material and the effectiveness of the classroom instruction. There is very little literature to support this, so this is purely anecdotal. None of the resources I have found have prescribed precisely when FEI should be conducted, but the name implies that the feedback should be sought at or around the semester midpoint.

Why Should We Conduct FEI?

FEI Can Help:

- Improve student satisfaction on summative feedback of instruction (Snooks et al., 2007; Veeck et al., 2016)

- Make substantive changes to the classroom experience, including textbooks, examinations/assessments of learning, and instructional methods (Snooks et al., 2007; Taylor et al., 2020)

- Strengthen teaching and improve rapport between students and faculty (Snooks et al., 2007; Taylor et al., 2020)

- Improve faculty development, including promotion and tenure (Taylor et al., 2020; Veeck et al., 2016), and encourage active learning (Taylor et al., 2020)

- Bolster communication of expectations in a reciprocal relationship between instructor and student (Snooks et al., 2007; Taylor et al., 2020)

How Should We Administer the FEI?

Research has suggested a wide variety of practices, including, but not limited to, involving a facilitator for the formative evaluation, asking open-ended questions, using no more than ten minutes of classroom time, keeping it anonymous, and keeping it short (Holt & Moore, 1992; Snooks et al., 2007; Taylor et al., 2020). Some authors even suggest having students work in groups to provide the feedback, or holding student conferences (Fluckiger et al., 2010; Veeck et al., 2016).

Hanover (2022) concluded that a formative evaluation should include three elements: a 7-point Likert scale question asking how the course is going for the student, followed by an open-ended question asking the student to explain the rating; the “Keep, Stop, Start” model, posed as open-ended questions; and, finally, open-ended questions that allow students to suggest changes and provide additional feedback on the course and/or instructor. The “Keep, Stop, Start” model is applied by asking students what they would like the instructors to keep doing, stop doing, and/or start doing.

In the college of pharmacy, we use the method that Hanover presented: we ask students to self-evaluate how well they feel they are doing in the class, and then to explain their rating in an open-ended, free-response field. The purpose is to collect and analyze themes that are associated with the different levels of evaluation rating (Best Practices in Designing Course Evaluations, 2022). This has only been in practice at the college of pharmacy for the past academic year, and anecdotally, from conversations with faculty, the data collected have generally been more actionable for the faculty. Like all evaluations, it is not a perfect system, and some of the data are not actionable, but in our college FEI is an integral part of indirect classroom assessment.

The most important step, however, is to close the feedback loop in a timely manner (Fluckiger et al., 2010; Taylor et al., 2020; Veeck et al., 2016). For our purposes, closing the feedback loop means asking the course coordinator to respond to the feedback given in the FEI, usually within a week, detailing what changes, if any, will be made in the classroom and learning environment. Not all feedback is actionable, and in some cases best practices in the literature conflict with the suggestions students make, but it is important for students to know what can be changed, what cannot or will not be changed, and why.
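For readers who analyze FEI responses in a script rather than a spreadsheet, the instrument and the theme analysis described above could be sketched roughly as follows. This is only an illustration, not the tool we use: the `FeiResponse` structure, the function names, the cut points for the rating bands, and the sample responses are all assumptions made up for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FeiResponse:
    """One anonymous student response (hypothetical structure)."""
    rating: int        # 1-7 Likert self-rating of how the course is going
    explanation: str   # open-ended explanation of the rating
    keep: str = ""     # what the instructor should keep doing
    stop: str = ""     # what the instructor should stop doing
    start: str = ""    # what the instructor should start doing


def rating_band(rating: int) -> str:
    """Collapse a 1-7 rating into three bands (cut points are an assumption)."""
    if not 1 <= rating <= 7:
        raise ValueError(f"rating must be between 1 and 7, got {rating}")
    if rating <= 2:
        return "low"
    if rating <= 5:
        return "middle"
    return "high"


def group_by_band(responses):
    """Group responses by rating band so comments can be read for themes."""
    grouped = defaultdict(list)
    for response in responses:
        grouped[rating_band(response.rating)].append(response)
    return dict(grouped)


# Invented placeholder responses, for illustration only.
sample = [
    FeiResponse(2, "I am lost after the first exam.", start="Post worked examples"),
    FeiResponse(6, "Lectures match the assessments.", keep="Weekly quizzes"),
    FeiResponse(7, "Pacing works for me.", keep="Case discussions"),
]

for band, items in group_by_band(sample).items():
    print(band, [r.explanation for r in items])
```

Reading the open-ended explanations side by side within each band makes it easier to see which themes accompany low ratings and which accompany high ones. The coding of themes itself remains a human judgment.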

What Remains?

Some accrediting bodies, like the Accreditation Council for Pharmacy Education (ACPE), require colleges to have an avenue for formative student feedback as part of their standards. I believe that formative evaluation benefits students and faculty alike, and while it may be too early to make a sweeping change and require FEI for every higher education institution, there may be value in educating faculty and assessment professionals about the benefits of FEI. Although it is outside the scope of this short blog post, adopting FEI as a common practice should be approached carefully, intentionally, and with best practices for change management in organizations.

Some final thoughts: to get students engaged in providing good feedback, the practice of FEI ideally has to be championed by the faculty. While it could be mandated by administration, a mandate would likely not engender as much buy-in. If the faculty, who are the primary touch-points for the students, aren’t sold on the practice or participate begrudgingly, the data collected will be less useful and less actionable. Students talk with each other across cohorts. If students in upper classes have a negative opinion of the process, that will have a negative trickle-down effect. What is the best way to make students disengage? Don’t close the feedback loop.

References and Resources

Best Practices in Designing Course Evaluations. (2022). Hanover Research.

Fluckiger, J., Tixier, Y., Pasco, R., & Danielson, K. (2010). Formative Feedback: Involving Students as Partners in Assessment to Enhance Learning. College Teaching, 58, 136–140. https://doi.org/10.1080/87567555.2010.484031

Holt, M. E., & Moore, A. B. (1992). Checking Halfway: The Value of Midterm Course Evaluation. Evaluation Practice, 13(1), 47–50.

Snooks, M. K., Neeley, S. E., & Revere, L. (2007). Midterm Student Feedback: Results of a Pilot Study. Journal on Excellence in College Teaching, 18(3), 55–73.

Taylor, R. L., Knorr, K., Ogrodnik, M., & Sinclair, P. (2020). Seven principles for good practice in midterm student feedback. International Journal for Academic Development, 25(4), 350–362.

Veeck, A., O’Reilly, K., MacMillan, A., & Yu, H. (2016). The Use of Collaborative Midterm Student Evaluations to Provide Actionable Results. Journal of Marketing Education, 38(3), 157–169. https://doi.org/10.1177/0273475315619652